Shouting into the Void

Why Reporting Abuse to Social Media Platforms Is So Hard and How to Fix It

Key Findings:

Writers and journalists regularly face harassment and threats to their free expression online, but when they try to report the abuse to digital platforms, they describe it as “shouting into the void.”

The mechanisms for reporting abuse online are deeply flawed and further traumatize and disempower those facing abuse, failing at every level to safeguard them and hold abusers to account.

This report outlines how platforms can build reporting mechanisms that are more user-friendly, accessible, transparent, efficient, and effective, with clearer and more regular communication between the user and the platform.

PEN America Experts:

Director, Digital Safety and Free Expression

External Experts:

Kat Lo

Program Manager, Digital Health Lab at Meedan

Introduction

When PEN America and Meedan asked writers, journalists, and creators about their experiences reporting online abuse to social media platforms, we heard again and again, over the past three years, about the deep frustration, exasperation, and harm caused by the reporting mechanisms themselves:

I do the reports because I don’t want to not report. That’s even worse. But it feels like shouting into a void. There’s no transparency or accountability.

Jaclyn Friedman, writer and founder, Women, Action & the Media [1: Jaclyn Friedman, interview with PEN America, May 28, 2020; Viktorya Vilk, Elodie Vialle, and Matt Bailey, “No Excuse for Abuse,” PEN America, March 2021, pen.org/report/no-excuse-for-abuse.]

Reporting is the only recourse that we have when abuse happens. It’s a form of accountability…but when people constantly feel like they are wasting their time, they are just going to stop reporting.

Azmina Dhrodia, expert on gender, technology, and human rights and former senior policy manager, World Wide Web Foundation [2: Azmina Dhrodia, interview by Kat Lo, Meedan, October 8, 2021.]

The experience of using reporting systems produces further feelings of helplessness… Rather than giving people a sense of agency, it compounds the problem.

Claudia Lo, senior design and moderation researcher, Wikimedia [3: Claudia Lo, interview by Kat Lo, Meedan, October 15, 2021.]

Online abuse is a massive problem.[4: PEN America defines online abuse or harassment as the “pervasive or severe targeting of an individual or group online through harmful behavior.” “Defining ‘Online Abuse’: A Glossary of Terms,” PEN America, accessed January 2021, onlineharassmentfieldmanual.pen.org/defining-online-harassment-a-glossary-of-terms.] According to a 2021 study from the Pew Research Center, 41 percent of adults in the U.S. have personally experienced online harassment. The rate of severe harassment, including stalking and sexual harassment, has significantly increased in recent years.[5: Emily A. Vogels, “The State of Online Harassment,” Pew Research Center, January 13, 2021, pewresearch.org/internet/2021/01/13/the-state-of-online-harassment.]

For journalists, writers, and creators who rely on having an online presence to make a living and make their voices heard, the situation is even worse, especially if they belong to groups already marginalized for their actual or perceived identity. In a 2020 global study of women journalists from UNESCO and the International Center for Journalists, 73 percent of respondents said they had experienced online abuse, and 20 percent reported that they had been attacked or abused offline in connection with online abuse. Women journalists from diverse racial and ethnic groups cited their identity as the reason they were disproportionately targeted online.[6: Julie Posetti et al., “Online Violence Against Women Journalists: A Global Snapshot of Incidence and Impacts,” UNESCO, 2020, unesdoc.unesco.org/ark:/48223/pf0000375136/PDF/375136eng.pdf.multi.] According to a 2018 crowdsourced study by Amnesty International, Black women were “84 percent more likely than white women to be mentioned in abusive or problematic tweets.”[7: “Crowdsourced Twitter study reveals shocking scale of online abuse against women,” Amnesty International, December 18, 2018, amnesty.org/en/latest/press-release/2018/12/crowdsourced-twitter-study-reveals-shocking-scale-of-online-abuse-against-women/]

Being inundated with hateful slurs, death threats, sexual harassment, and doxing can have dire consequences. On an individual level, online abuse places an enormous strain on mental and physical health. On a systemic level, when creative and media professionals are targeted for what they write and create, it chills free expression and stifles press freedom, deterring participation in public discourse.[8: Julie Posetti et al., “Online Violence Against Women Journalists: A Global Snapshot of Incidence and Impacts,” UNESCO, 2020, unesdoc.unesco.org/ark:/48223/pf0000375136/PDF/375136eng.pdf.multi; Michelle Ferrier, “Attacks and Harassment: The Impact on Female Journalists and Their Reporting,” TrollBusters and International Women’s Media Foundation, September 13, 2018, iwmf.org/wp-content/uploads/2018/09/Attacks-and-Harassment.pdf; “Toxic Twitter: The Psychological Harms of Violence and Abuse Against Women Online” (Ch. 6), Amnesty International, March 2018, amnesty.org/en/latest/news/2018/03/online-violence-against-women-chapter-6-6; Avery E. Holton et al., “‘Not Their Fault, but Their Problem’: Organizational Responses to the Online Harassment of Journalists,” Journalism Practice 17, no. 4 (July 5, 2021): 859–874, doi.org/10.1080/17512786.2021.1946417; Kurt Thomas et al., “‘It’s common and a part of being a content creator’: Understanding How Creators Experience and Cope with Hate and Harassment Online,” CHI Conference on Human Factors in Computing Systems, April 29–May 5, 2022, storage.googleapis.com/pub-tools-public-publication-data/pdf/ddef9ee78915e1b7ea9d04cba3056919c65fea86.pdf.]

Online abuse is often deployed to stifle dissent. Governments and political parties are increasingly using online attacks, alongside physical attacks and trumped-up legal charges, to intimidate and undermine critical voices, including those of journalists and writers.[9: Julie Posetti et al., “Maria Ressa: Fighting an Onslaught of Online Violence: A big data analysis,” International Center for Journalists, March 2021, icfj.org/our-work/maria-ressa-big-data-analysis; Amna Nawaz and Zeba Warsi, “Journalist and Critic of Indian Government Faces Sham Charges Designed to Silence Her,” PBS NewsHour, January 6, 2023, pbs.org/newshour/show/journalist-and-critic-of-indian-government-faces-sham-charges-designed-to-silence-her; Gerntholz et al., “Freedom to Write Index 2022,” PEN America, April 27, 2023, pen.org/report/freedom-to-write-index-2022/; Jennifer Dunham, “Deadly year for journalists as killings rose sharply in 2022,” Committee to Protect Journalists, January 24, 2023, cpj.org/reports/2023/01/deadly-year-for-journalists-as-killings-rose-sharply-in-2022/]

The technology companies that run social media platforms, where so much online abuse plays out, are failing to protect and support their users. When the Pew Research Center asked people in the U.S. how well social media companies were doing in addressing online harassment on their platforms, nearly 80 percent said that companies were doing “an only fair or poor job.”[10: Emily Vogels, “A majority say social media companies are doing an only fair or poor job addressing online harassment,” Pew Research Center, January 13, 2021, pewresearch.org/internet/pi_2021-01-13_online-harrasment_0-08a] According to a 2021 study of online hate and harassment conducted by the Anti-Defamation League and YouGov, 78 percent of Americans specifically want companies to make it easier to report hateful content and behavior, up from 67 percent in 2019.[11: “Online Hate and Harassment: The American Experience 2021,” Anti-Defamation League, March 22, 2021, adl.org/resources/report/online-hate-and-harassment-american-experience-2021]

Finding product and policy solutions that counter the negative impacts of online abuse without infringing on free expression is challenging, but it is also doable, given time, resources, and will. In a 2021 report, No Excuse for Abuse, PEN America outlined a series of recommendations that social media platforms could enact to reduce risk, minimize exposure, facilitate response, and deter abusive behavior, while maintaining the space for free and open dialogue. In the course of that research, it became clear that the mechanisms for reporting abusive and threatening content to social media platforms were deeply flawed.[12: Viktorya Vilk, Elodie Vialle, and Matt Bailey, “No Excuse for Abuse,” PEN America, March 2021, pen.org/report/no-excuse-for-abuse] In this follow-up report, we set out to understand how and why.

On most social media platforms, people can “report” to the company that a piece of content, or an entire account, is violating policies. When a user chooses to report abusive content or accounts, they typically initiate a “reporting flow,” a series of steps they follow to indicate how the content or account violates platform policies. In response, a platform may remove the reported content or account, use other moderation interventions (such as downranking content or issuing a warning), or take no action at all, depending on the company’s assessment of whether the reported content or account is violative.

For users, reporting content that violates platform policies is one of the primary means of defending themselves, protecting their community, and seeking accountability. For platforms, reporting is a critical part of the larger content moderation process.

To identify abusive content, social media companies combine proactive detection, carried out by automated systems and human moderators, with reactive detection via user reports, which are then adjudicated by automated systems or human moderators. The pandemic accelerated platforms’ increasing reliance on automation, including the algorithmic detection of harmful language. While automated systems help companies operate at scale and at lower cost, they are highly imperfect.[13: João Carlos Magalhães and Christian Katzenbach, “Coronavirus and the frailness of platform governance,” Internet Policy Review 9 (March 2020), ssoar.info/ssoar/bitstream/handle/document/68143/ssoar-2020-magalhaes_et_al-Coronavirus_and_the_frailness_of.pdf?sequence=1&isAllowed=y&lnkname=ssoar-2020-magalhaes_et_al-Coronavirus_and_the_frailness_of.pdf; Tarleton Gillespie, “Content moderation, AI, and the question of scale,” Big Data & Society 7, no. 2 (July–December 2020): 1–5, doi.org/10.1177/2053951720943234; Robert Gorwa et al., “Algorithmic content moderation: Technical and political challenges in the automation of platform governance,” Big Data & Society 7, no. 1 (January–June 2020), doi.org/10.1177/2053951719897945; Carey Shenkman et al., “Do You See What I See? Capabilities and Limits of Automated Multimedia Content Analysis,” Center for Democracy & Technology, May 2021, cdt.org/wp-content/uploads/2021/05/2021-05-18-Do-You-See-What-I-See-Capabilities-Limits-of-Automated-Multimedia-Content-Analysis-Full-Report-2033-FINAL.pdf]

Human moderators are better equipped to take the nuances of language, as well as cultural and sociopolitical context, into account. Relying on human moderation to detect abusive content, however, comes with its own challenges, including scalability, implicit bias, and fluency and cultural competency across languages. Moreover, many human moderators, the majority of whom are located in the Global South, are economically exploited and traumatized by the work.[14: Andrew Arsht and Daniel Etcovitch, “The Human Cost of Online Content Moderation,” Harvard Journal of Law & Technology JOLT Digest, March 2, 2018, law.harvard.edu/digest/the-human-cost-of-online-content-moderation; Billy Perrigo, “Inside Facebook’s African Sweatshop,” TIME, February 14, 2022, time.com/6147458/facebook-africa-content-moderation-employee-treatment/; Bobby Allen, “Former TikTok moderators sue over emotional toll of ‘extremely disturbing’ videos,” NPR, March 24, 2022, npr.org/2022/03/24/1088343332/tiktok-lawsuit-content-moderators]

Because proactive detection of online abuse, both human and automated, is highly imperfect, reactive user reporting remains a critical part of the larger content moderation process. More effective user reporting, in turn, can also provide the data needed to better train automated systems. When reporting mechanisms do not work properly, however, they undermine the entire content moderation process, significantly impeding the ability of social media companies to fulfill their duty of care to protect their users and facilitate the open exchange of ideas.

A poorly functioning moderation process threatens free expression in myriad ways. Content moderation interventions that remove or reduce the reach of user content can undermine free expression, especially when weaponized or abused.[15: Jodie Ginsberg, “Social Media Bans Don’t Just Hurt Those You Disagree With—Free Speech Is Damaged When the Axe Falls Too Freely,” The Independent, May 17, 2019, independent.co.uk/voices/free-speech-social-media-alex-jones-donald-trump-facebook-twitter-bans-a8918401.html; Queenie Wong, “Is Facebook censoring conservatives or is moderating just too hard?,” CNET, October 29, 2019, cnet.com/tech/tech-industry/features/is-facebook-censoring-conservatives-or-is-moderating-just-too-hard] At the same time, harassing accounts that are allowed to operate with impunity can chill the expression of the individuals or communities they target.[16: “The Chilling: A Global Study On Online Violence Against Women Journalists,” International Center for Journalists, November 2, 2022, icfj.org/our-work/chilling-global-study-online-violence-against-women-journalists; Caroline Sinders, Vandinika Shukla, and Elyse Voegeli, “Trust Through Trickery,” Commonplace, PubPub, January 5, 2021, doi.org/10.21428/6ffd8432.af33f9c9]

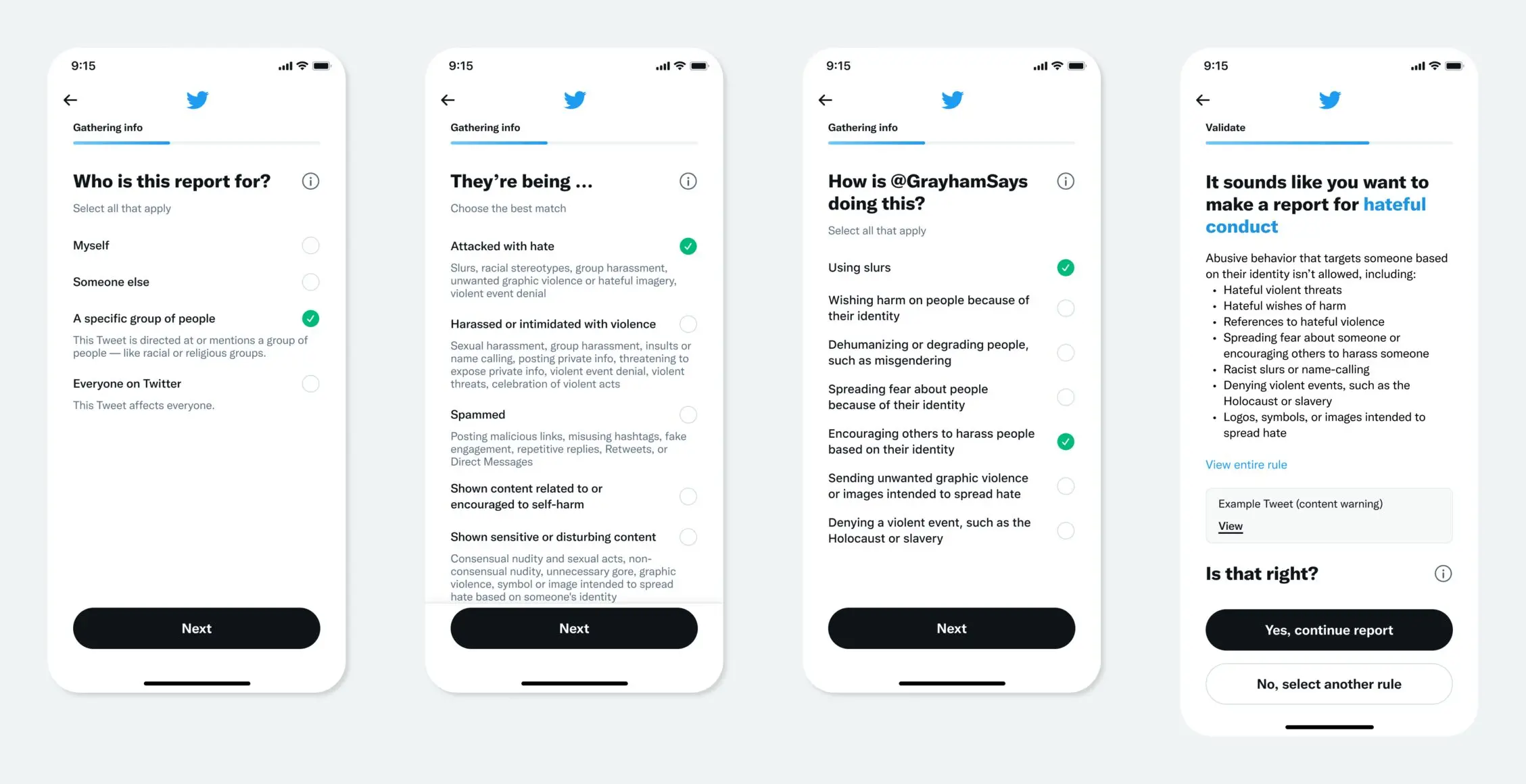

In our research, we found that reporting mechanisms on social media platforms are often profoundly confusing, time-consuming, frustrating, and disappointing. Users frequently do not understand how reporting actually works, including where they are in the process, what to expect after they submit a report, and who will see their report. Additionally, users often do not know if, or why, a decision has been reached regarding their report. They are consistently confused about how platforms define specific harmful tactics and therefore struggle to figure out if a piece of content is violative. Few reporting systems currently take into account coordinated or repeated harassment, leaving users with no choice but to report dozens or even hundreds of abusive comments and messages piecemeal.

On the one hand, the reporting process takes many steps and can feel unduly laborious; on the other, there is rarely the opportunity to provide context or explain why a user may find something abusive. Few platforms offer any kind of accessible or consistent documentation feature, which would allow users to save evidence of online abuse even if it has been deemed abusive and removed. And fewer still enable users to ask their allies for help with reporting, which makes it more difficult to reduce exposure to abuse.

When the reporting process is confusing, users make mistakes. When the reporting process does not leave any room for the addition of context, moderators may lack the information they need to decide whether content is violative. It’s a lose-lose situation—except perhaps for abusive trolls.

For this report, nonprofit organizations PEN America and Meedan joined forces to understand why reporting mechanisms on platforms are often so difficult and frustrating to use, and how they can be improved. Informed by interviews with nearly two dozen writers, journalists, creators, technologists, and civil society experts, as well as extensive analysis of existing reporting flows on major platforms (Facebook, Instagram, YouTube, Twitter, and TikTok), this report maps out concrete, actionable recommendations for how social media companies can make the reporting process more user-friendly, more effective, and less harmful.

While we discuss the policy implications of our research, our primary goal is to highlight how platform design fails to make existing policies effective in practice. We recognize that reporting mechanisms are only one aspect of content moderation, and changes to reporting mechanisms alone are not sufficient to mitigate the harms of online abuse. Comprehensive platform policies, consistent and transparent policy enforcement, and sophisticated user-centered features are central to more effectively addressing online abuse and protecting users. And yet reporting remains the first line of defense for millions of users worldwide facing online harassment. If social media platforms fail to revamp reporting, as well as put more holistic protections in place, then public discourse in online spaces will remain less inclusive, less equitable, and less free.

Create a Dashboard for Tracking Reports, Outcomes, and History

Challenges

Reporting online abuse to tech companies takes time and energy and can amplify the stress, anxiety, and fear of the person experiencing the abuse. Once a person has reported abuse, it is imperative that they receive regular communication and have transparency about the content moderation process, so that they can track outcomes and understand why decisions were made.

At present, however, users often express a sense that they are reporting into a void. In many cases, there is no indication of whether a content moderation decision has been reached or why.[17: Sophia Smith Galer, interview by Kat Lo, Meedan, December 20, 2021; Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021; Nathan Grayson, interview by Kat Lo, Meedan, October 26, 2021; Elizabeth Ballou, interview by Kat Lo, Meedan, November 30, 2021; Viktorya Vilk, Elodie Vialle, and Matt Bailey, “No Excuse for Abuse,” PEN America, March 2021, pen.org/report/no-excuse-for-abuse] On some platforms, such as Twitter and Twitch, there isn’t even a way to see filed reports once they have been submitted.[18: Leigh Honeywell, interview by Kat Lo, Meedan, October 25, 2021.] Sarah Fathallah, senior research fellow at the research lab Think of Us, said, “After sending a report on Instagram you would get a message saying ‘we’re going to review this,’ but there was no indication of how long the review is going to take or whether they would tell us their decision. When the account got suspended, we found out by going back to the harasser’s page I reported.”[19: Sarah Fathallah, interview by Kat Lo, Meedan, November 18, 2021.]

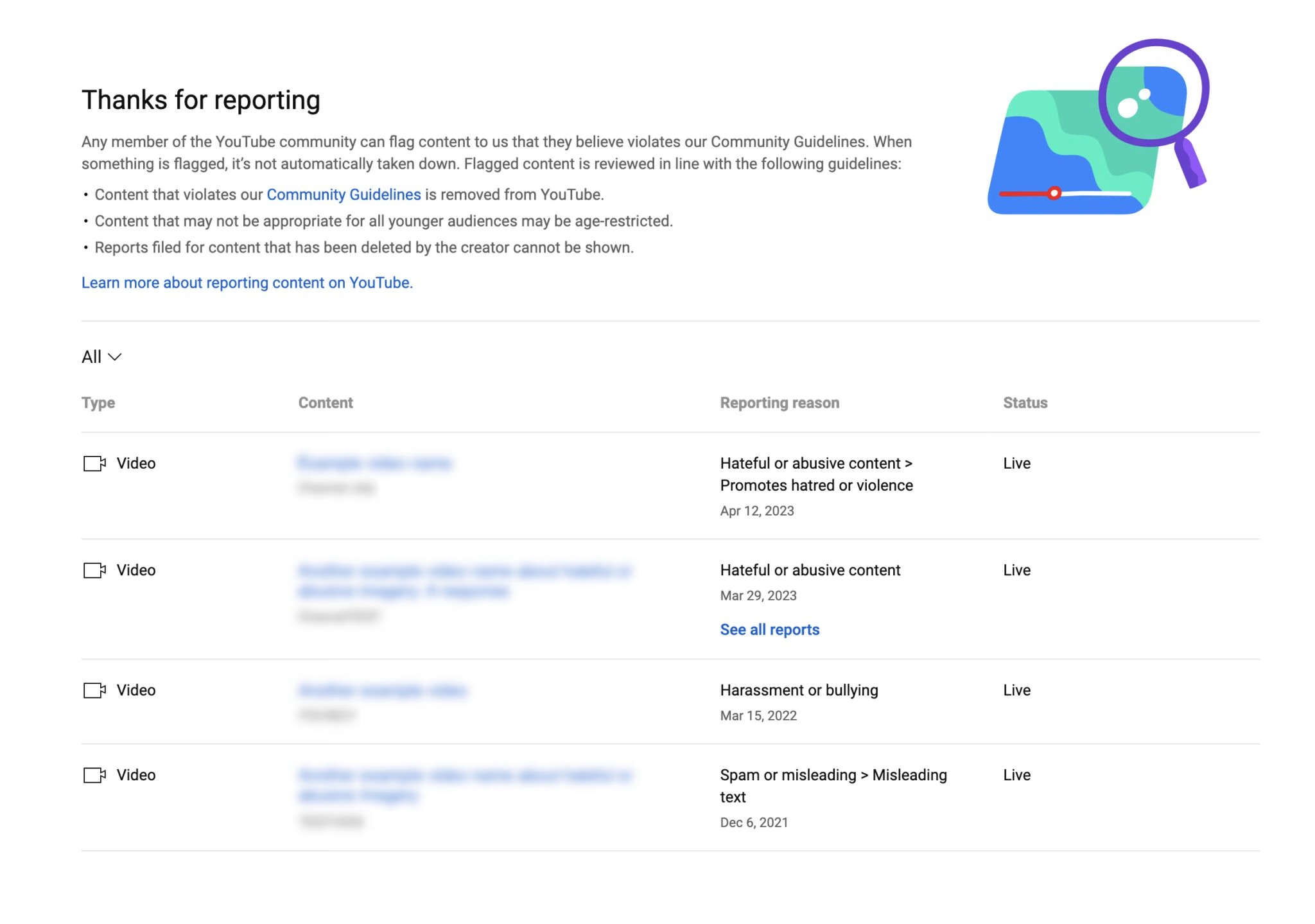

Most platforms do offer some communication about the reporting process, and some even have basic inboxes with records of and updates about reports, but the communication is often limited and sporadic, and the inboxes can be exceedingly difficult to find and navigate. For example, Instagram and Facebook provide basic inboxes to store reports (see case study below), but users must dig around to find them. TikTok also has an inbox, and users are informed about it when they report content, but this inbox is otherwise difficult to find and only accessible via the mobile app. Twitter does not have an inbox or dashboard for reports; instead, it sends sporadic updates via the notification feed and users’ emails.

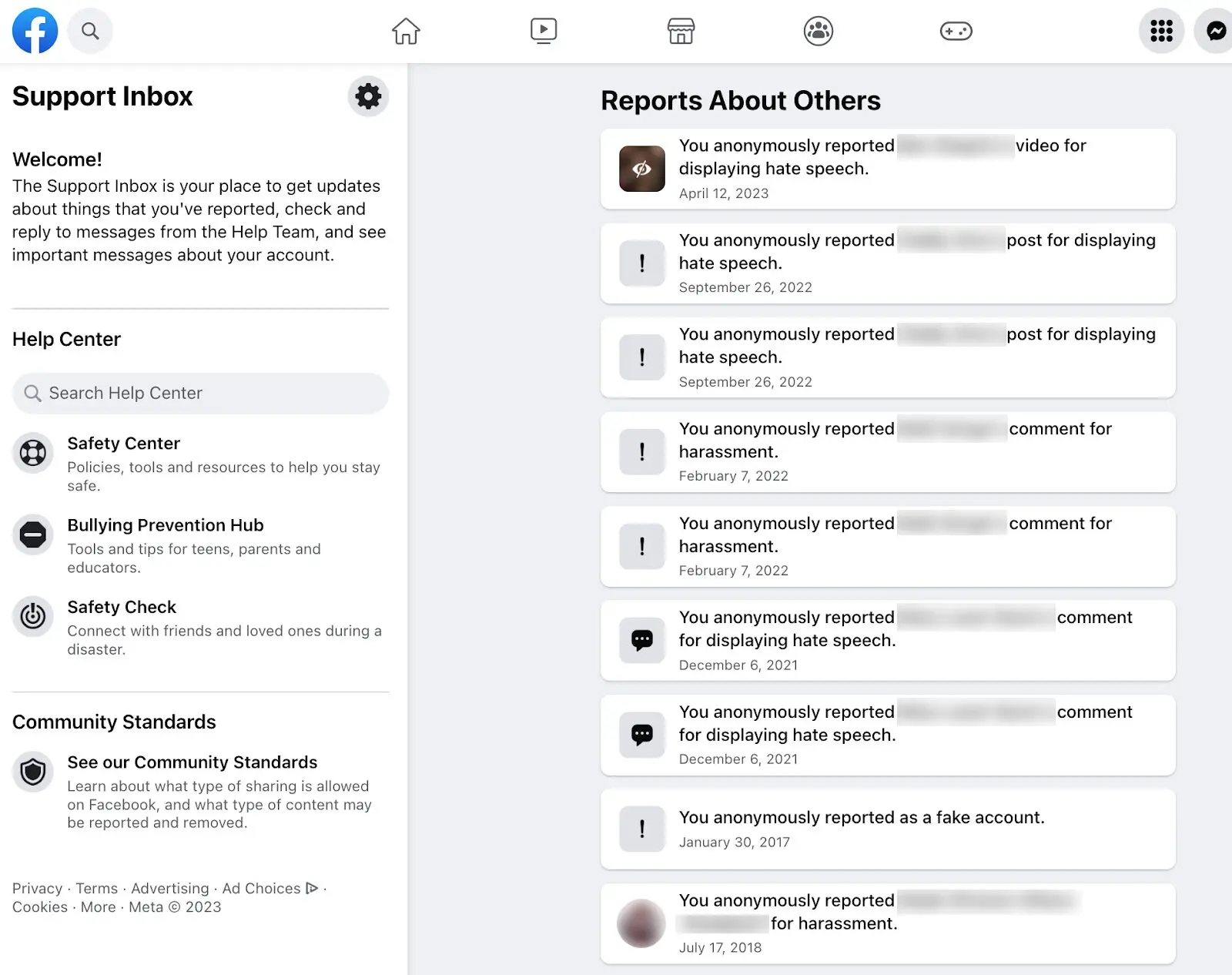

YouTube comes the closest to offering a dashboard for individual users, via its “Report history” section, which is relatively accessible on a desktop, but does not seem to work on mobile. This dashboard allows users to track which videos they have reported, but does not include reported comments, does not indicate where a report is in the review process, and does not include all of the information a user has submitted in the report. Tellingly, it’s actually YouTube’s copyright dashboard, rather than its reporting history dashboard, that provides the kind of information, usability, and accessibility that should exist across all platforms for tracking reports of hate and harassment (for more, see case study below).

Recommendations

- Create a dashboard, at the account level, that enables an individual user to track the reports they have personally submitted, the status of each report within the content moderation process, and the outcome for each report. If the user disagrees with the content moderation decision reached in response to the content or account they reported, they should be able to appeal from within the dashboard. This dashboard:

  - should include reports for all content types, including user profiles, posts, replies, comments, and direct messages;

  - should include all key information relevant to reporting, in one place, including the current estimated or average processing times for reviewing reports and whether a human or a bot reviewed the report; and

  - could include reports made on behalf of the individual user by authorized third parties (see section 7).

- Ensure the dashboard is easily accessible and user-friendly. The reporting dashboard needs to be readily visible and easily accessible from the user settings menu and available across mobile and desktop. And it needs to be easy to use. Platforms should educate users about the existence of the dashboard through prompts at the end of the reporting flow.

- Design the dashboard through an identity-aware and trauma-informed lens. Accessible dashboards and notifications are valuable tools, but they could also increase users’ exposure to abusive content. This can be mitigated by employing principles of trauma-informed design, which center on providing users with greater control over when and how they view abusive content.[20: Janet X. Chen et al., “Trauma-Informed Computing: Towards Safer Technology Experiences for All,” CHI ’22: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, no. 544 (April 2022): 1–20, doi.org/10.1145/3491102.3517475] To do this, platforms could minimize visibility of the harmful content within the dashboard itself by hiding it and giving users the option to “click to view”; allow users to sort, hide, and archive reports from the dashboard; allow users to indicate whether they want to see the harmful content again when they receive updates about the status of their reports; and/or provide users with the option to receive updates only within the dashboard rather than via emails or pop-up notifications.
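To make the dashboard recommendations above more concrete for product teams, here is a minimal sketch of the record such a dashboard might keep for each report. It is written in Python purely for illustration: every name in it (`ReportRecord`, `Status`, `ContentType`, `visible_reports`, the field names, and the status labels) is hypothetical and does not describe any platform's actual system. It assumes that reported content is hidden by default until the user chooses to reveal it, and that an appeal can only be opened once a decision exists.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import List, Optional


class ContentType(Enum):
    # Hypothetical labels for the content types the dashboard should cover.
    PROFILE = "profile"
    POST = "post"
    REPLY = "reply"
    COMMENT = "comment"
    DIRECT_MESSAGE = "direct message"


class Status(Enum):
    # Hypothetical stages of the moderation process shown to the user.
    RECEIVED = "received"
    IN_REVIEW = "in review"
    DECIDED = "decided"
    UNDER_APPEAL = "under appeal"


@dataclass
class ReportRecord:
    report_id: str
    content_type: ContentType
    reported_text: str
    submitted_at: datetime
    status: Status = Status.RECEIVED
    outcome: Optional[str] = None            # e.g. "content removed", "no action"
    reviewed_by: Optional[str] = None        # "human" or "automated"
    filed_by_delegate: Optional[str] = None  # authorized third party, if any
    archived: bool = False
    reveal_content: bool = False             # trauma-informed default: hidden

    def can_appeal(self) -> bool:
        # An appeal only makes sense once a decision has been reached.
        return self.status is Status.DECIDED

    def appeal(self) -> None:
        if not self.can_appeal():
            raise ValueError("there is no decision to appeal yet")
        self.status = Status.UNDER_APPEAL

    def summary(self, typical_review_time: timedelta) -> str:
        # Show the abusive content only if the user has chosen to see it.
        content = self.reported_text if self.reveal_content else "[hidden: click to view]"
        hours = int(typical_review_time.total_seconds() // 3600)
        return (
            f"{self.report_id} | {self.content_type.value} | {self.status.value} | "
            f"outcome: {self.outcome or 'pending'} | "
            f"reviewed by: {self.reviewed_by or 'not yet reviewed'} | "
            f"typical review time: {hours}h | {content}"
        )


def visible_reports(reports: List[ReportRecord], include_archived: bool = False) -> List[ReportRecord]:
    # Newest first; archived reports stay out of view unless the user asks for them.
    shown = [r for r in reports if include_archived or not r.archived]
    return sorted(shown, key=lambda r: r.submitted_at, reverse=True)
```

Keeping `reveal_content` false by default lets the dashboard show status, outcome, and reviewer type without re-exposing the user to the abuse, while `archived` gives them a way to clear finished reports from view without deleting the record.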

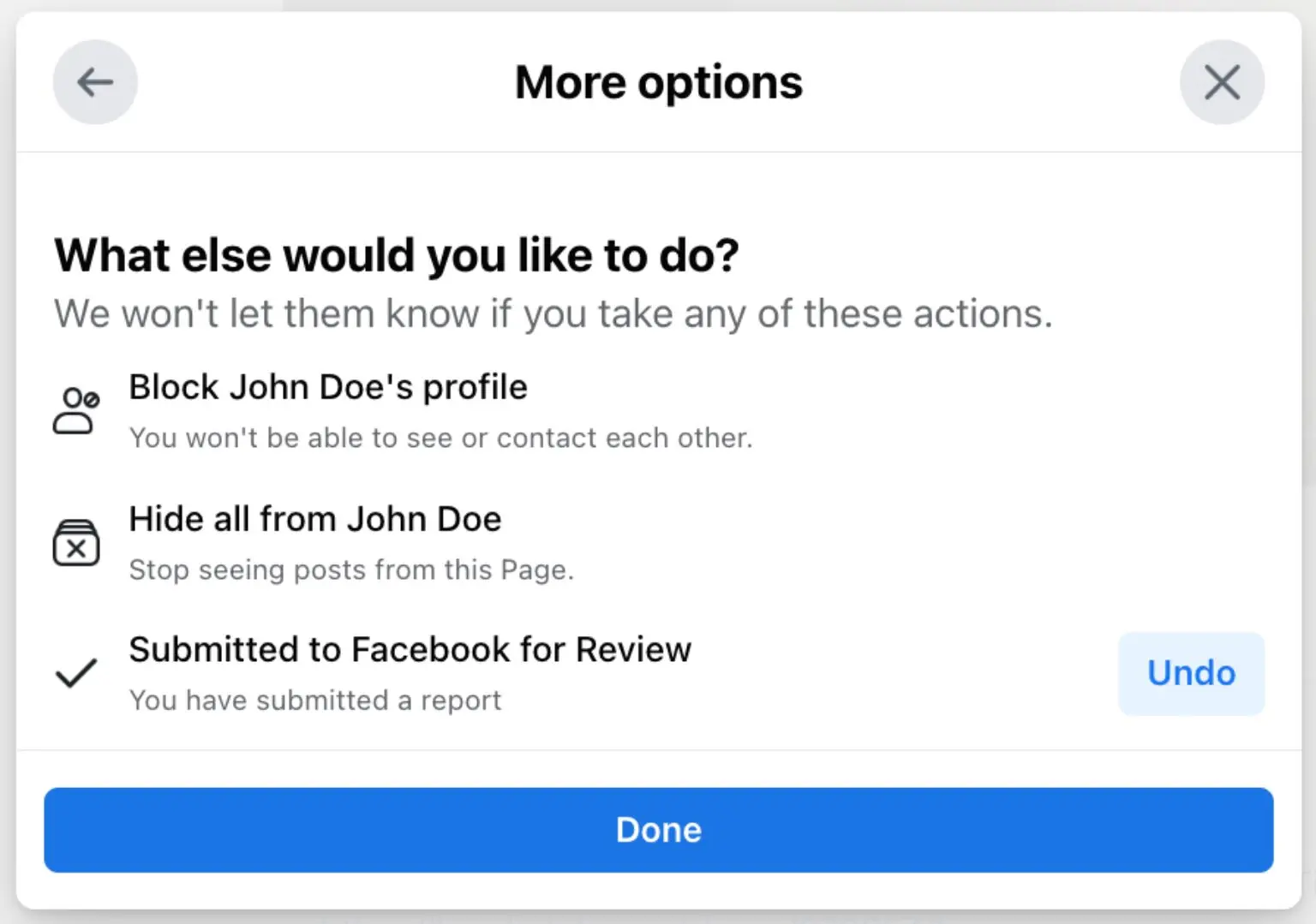

Product case study: Facebook’s Support Inbox for reporting

For Facebook, Meta has developed a “Support Inbox” that centralizes all of the content a user has reported as violative. The Support Inbox provides several useful features, including the ability to delete the report from the inbox and block the abusive user. In our tests, users were occasionally offered the option to request additional review, but we found this feature to be highly inconsistent and unpredictable. Moreover, users were often not given access within the inbox to the content of the post that they reported. Multiple individuals we interviewed could not actually find the inbox or were not even aware that it existed.[21: Natalie Wynn, interview by Kat Lo, Meedan, December 17, 2021; Corry Will, interview by Kat Lo, Meedan, December 14, 2021; Azmina Dhrodia, interview by Kat Lo, Meedan, October 8, 2021; Elizabeth Ballou, interview by Kat Lo, Meedan, November 30, 2021.] And there were significant issues with keeping users updated, in a clear and timely manner, about the status of their reports. In a formal response, Meta assured us that they “always inform the user we’ve received their report or feedback and follow up accordingly” and inform users “about the final outcome of their reports through a message in their Support Inbox.”[22: Email response from Meta spokesperson, March 17, 2023.] However, we extensively tested Facebook’s reporting flows and found that the outcomes of reports were presented inconsistently and were sometimes not updated more than a year after the report was submitted, or simply showed error messages.

Product case study: YouTube’s copyright management tool as a model for a reporting dashboard

It is the dashboard for YouTube’s copyright management tool, rather than the platform’s actual reporting system, that provides a model for how platforms can build an effective dashboard for reporting abusive content. If a YouTube user discovers there is unauthorized use of their content on the platform, they can deploy the Copyright Match Tool to identify and protect their intellectual property.[23: “Use the Copyright Match Tool,” Google, accessed March 2023, support.google.com/youtube/answer/7648743] This tool provides a centralized dashboard from which users can request removal or monetization of their copyrighted content, including content that has been automatically detected by YouTube’s Content ID system.[24: “How Content ID works,” Google, accessed March 2023, support.google.com/youtube/answer/2797370?hl=en] The screenshot below shows this user-friendly and responsive dashboard, which includes key information relevant for reporting, such as type of media; title, description, or preview of the content; description of the relevant violation; and the status of the request. An important aspect of this dashboard interface is that the user can see key information across all of the items at one time in a way that is not overwhelming. It is precisely this kind of centralization, usability, and accessibility that should exist on YouTube itself and across all platforms to help users track their reports of hate and harassment.

Give Users Greater Clarity and Control as They Report Abuse

Challenges

While it is critically important for users to understand what to expect after they have reported abuse, it is equally important that they understand what to expect during the process of reporting abuse. If the actual reporting mechanism is unpredictable and unclear, that can exacerbate the feelings of stress and powerlessness often caused by the abuse itself. Users need to understand what steps they will need to take, where they are in the reporting flow, whether they will be given the opportunity to add context, and who will see their report once they submit it. For many platforms, this is not currently the case.

During walk-throughs and interviews with our research team, multiple participants found reporting mechanisms deeply confusing; two were caught by surprise when their reports were submitted before they even realized they had reached the end of the reporting flow.[25: Elizabeth Ballou, interview by Kat Lo, Meedan, November 30, 2021; Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021; Azmina Dhrodia, interview by Kat Lo, Meedan, October 8, 2021; Jaclyn Friedman, interview with PEN America, May 28, 2020; Nathan Grayson, interview by Kat Lo, Meedan, October 26, 2021; Elisa Hansen, interview by Kat Lo, Meedan, January 4, 2022; Leigh Honeywell, interview by Kat Lo, Meedan, October 25, 2021; Mikki Kendall, interview by Kat Lo, Meedan, December 17, 2021; Claudia Lo, interview by Kat Lo, Meedan, October 15, 2021; Corry Will, interview by Kat Lo, Meedan, December 14, 2021.] For example, as one interviewee was attempting to report abuse to Twitter (before the revamp of its reporting process described below), she was looking for a way to add context and additional tweets when she suddenly discovered that the report had already been submitted without any of the information she intended to include.[26: Elizabeth Ballou, interview by Kat Lo, Meedan, November 30, 2021.]

If users do not know who will ultimately be notified of their report, they may be less likely to report abusive content, even on behalf of someone else, because they fear being negatively perceived by others.[27: Sai Wang, “Standing up or standing by: Bystander intervention in cyberbullying on social media,” New Media & Society 23, no. 6: 1379–1397, January 29, 2020, doi.org/10.1177/1461444820902541] In a 2020 survey of minors who chose not to seek help when confronted with potentially harmful experiences, 24 percent were worried their report would not be anonymous, despite feeling confident in their ability to use platforms’ reporting tools effectively.[28: “Responding to Online Threats: Minors’ Perspectives on Disclosing, Reporting, and Blocking: Findings from 2020 Quantitative Research among 9–17 Year Olds,” Thorn, 2021, info.thorn.org/hubfs/Research/Responding%20to%20Online%20Threats_2021-Full-Report.pdf] While the studies we cite were focused on youth, we found parallels in our own research with writers, journalists, and creators. And users have every reason to be concerned. In one egregious example, Renée DiResta, research manager at the Stanford Internet Observatory, filed a copyright-based takedown request to stop harassers from using photographs of her baby as part of a coordinated harassment campaign; Twitter not only alerted her harassers but also shared her private information, thereby exposing DiResta to further abuse and safety risks.[29: Renée DiResta, “How to Not Protect Your Users,” Medium, June 8, 2016, medium.com/@noupside/how-to-not-protect-your-users-77d87871d716]

For users to feel safe reporting, they need to know if the harasser will be notified that their content has been reported and if the harasser will be notified about the identity of the person who reported their content. Even when platforms provide assurances of anonymity in their help centers, this information is not clearly stated within the reporting flow itself, where users actually need to see it in real time in order to feel comfortable proceeding.

And finally, if users discover that reporting abuse reduces their ability to mitigate that content in other ways, they may choose not to report at all. For example, interviewee Corry Will, an educational science influencer, demonstrated to us in real time that when he attempted to report an abusive comment on Instagram, the comment automatically disappeared from his view, preventing him from taking any further action on it, such as deleting it or blocking the abusive account; yet everyone else could still see and interact with the abusive comment. In other words, the reporting process effectively prevented him from further protecting himself.[30: Corry Will, interview by Kat Lo, Meedan, December 14, 2021.] By contrast, Twitter hides reported comments under a click-through that says “You reported this tweet,” but with the option to view and act on the previously reported content.[31: Nick Statt, “Twitter will soon indicate when a reported tweet was taken down,” The Verge, October 17, 2018, theverge.com/2018/10/17/17990154/twitter-reported-tweets-public-notice-anti-harassment-feature] In a 2021 survey of creators across social media platforms who had experienced hate and harassment, creators similarly expressed confusion about the trade-offs between reporting and taking other moderation actions, such as removing the offending comment, to mitigate harm as quickly as possible.[32: Kurt Thomas et al., “‘It’s common and a part of being a content creator’: Understanding How Creators Experience and Cope with Hate and Harassment Online,” CHI Conference on Human Factors in Computing Systems, April 29–May 5, 2022, storage.googleapis.com/pub-tools-public-publication-data/pdf/ddef9ee78915e1b7ea9d04cba3056919c65fea86.pdf]

Recommendations

- Ensure users understand how the reporting flow works, including what steps they will need to take, whether they will be given the opportunity to add information, who will see the report once they submit it, who will be notified that they reported abuse, if they should expect a platform response and within what timeframe they should expect that response, how they will be notified, and if there’s an appeals process.

- Provide a progress bar that helps users understand where they are within the reporting flow, in real time, as they complete the process.

- Make it clear to users when their reports are about to be submitted and allow users to review their reports before they are submitted. At the end of the reporting flow, show the user the information that they are submitting—including the categories they selected and any contextual information that they provided—before the user clicks on the button to submit the report. And include a “back” button so that the user can make edits to their report without having to restart it.

- Ensure that reporting does not impede or reduce a user’s ability to act on abusive content in other ways, including by blocking, muting, restricting, or documenting the content or the account engaging in harassment.

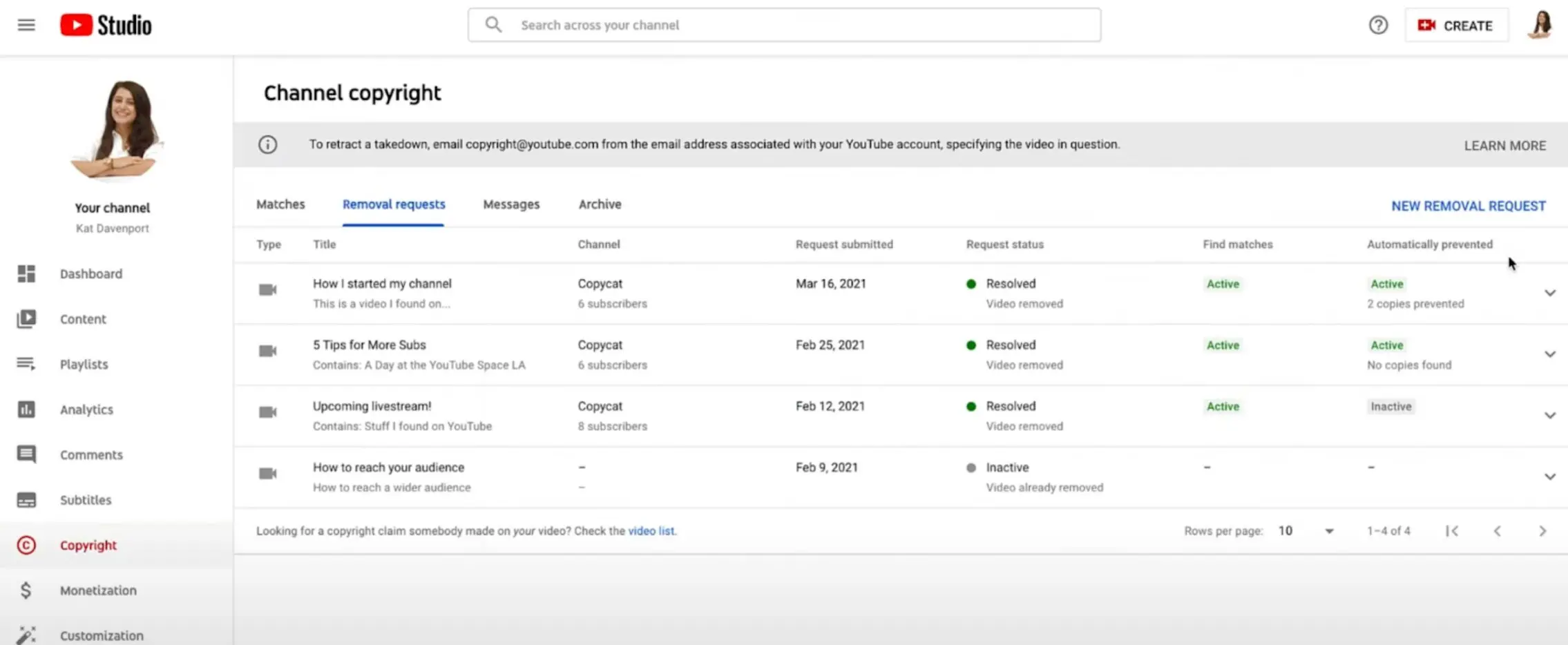

Product case study: Twitter’s revamped reporting process

In June 2022, Twitter released a substantively revamped user experience for reporting harmful content,33“Twitter’s New Reporting Process Centers on a Human-First Design,” Twitter, December 7, 2021, blog.twitter.com/common-thread/en/topics/stories/2021/twitters-new-reporting-process-centers-on-a-human-first-design which has many features that align with this report’s recommendations. Unfortunately, since Elon Musk purchased the platform in 2022 and gutted its Trust and Safety teams, it is unclear whether this revamped reporting process and the policies it aims to enforce will be kept in place. Moreover, under Musk’s management, Twitter’s larger content moderation process, of which reporting is just one critical component, has become significantly less effective in implementing platform policies, leading to an influx of hate and harassment on the platform.34Sheera Frenkel and Kate Conger, “Hate Speech’s Rise on Twitter Is Unprecedented, Researchers Find,” The New York Times, December 2, 2022, nytimes.com/2022/12/02/technology/twitter-hate-speech.html That said, the improvements to Twitter’s reporting system from 2022 are worth highlighting, including:

- Allowing users to indicate how they believe the content policy that they selected is being violated, including the ability to provide additional context.

- Allowing users facing identity-based attacks to indicate what aspect of their identity is being targeted.

- Suggesting the category of abuse based on the answers the user provided, with a detailed description and example of what that category entails.

- Allowing the user to choose different categories of abuse if they disagree with the category that Twitter proactively suggested.

- Allowing users to report multiple abusive tweets from a single account.

- Streamlining additional steps by condensing the “Add other tweets” and “Add additional context” options into a menu on a single screen.

- Providing an overview of the report for users to review before submitting it.

- Informing the user what will happen next, including an estimate of how long the content moderation process will take.

Align Reporting Mechanisms with Platform Policies

Challenges

Platforms are effectively asking users to report only content that violates their policies. In order to do that, however, users need to understand what platforms do—and do not—consider violative. The problem is that users are often confused about how platforms define specific types of harm and harmful tactics, which in turn undermines reporting and the larger content moderation process.35Natalie Wynn, interview by Kat Lo, Meedan, December 17, 2021; Elisa Hansen, interview by Kat Lo, Meedan, January 4, 2022; Viktorya Vilk, Elodie Vialle, and Matt Bailey, “No Excuse for Abuse,” PEN America, March 2021, pen.org/report/no-excuse-for-abuse; Caroline Sinders, Vandinika Shukla, and Elyse Voegeli, “Trust Through Trickery,” Commonplace, PubPub, January 5, 2021, doi.org/10.21428/6ffd8432.af33f9c9

If there is a comment calling a trans woman a man, is that hate speech or is it harassment? I don’t know. I kind of don’t know what to click and so I don’t do it, and just block.

Natalie Wynn, creator of the YouTube channel ContraPoints

Users who are reporting abuse experience confusion and anxiety about deciding which reporting category is “best” to select so that the abusive content is evaluated under the appropriate policy. Mikki Kendall, an author and diversity consultant who writes about race, feminism, and police violence, points out that some platforms that say they prohibit “hate speech” provide “no examples and no clarity on what counts as hate speech.”36Mikki Kendall, interview by Kat Lo, Meedan, December 17, 2021. Natalie Wynn, creator of the YouTube channel ContraPoints, explained: “If there is a comment calling a trans woman a man, is that hate speech or is it harassment? I don’t know. I kind of don’t know what to click and so I don’t do it, and just block.”37Natalie Wynn, interview by Kat Lo, Meedan, December 17, 2021. A number of interviewees thought that “bullying and harassment” required multiple persistent actions from one or more individuals and were hesitant to report individual pieces of abusive content, when in fact individual pieces of content can also qualify as harassment under most platform policies.38Elisa Hansen, interview by Kat Lo, Meedan, January 4, 2022; Jareen Imam, interview by Kat Lo, Meedan, October 29, 2021; Claudia Lo, interview by Kat Lo, Meedan, October 15, 2021; Corry Will, interview by Kat Lo, Meedan, December 14, 2021. In other words, confusion about how platforms define abuse-related terms and tactics can discourage users from reporting abuse altogether.39Nathan Grayson, interview by Kat Lo, Meedan, October 26, 2021; Aaron Smith and Maeve Duggan, “Crossing the Line: What Counts as Online Harassment?,” Pew Research Center, January 4, 2018, pewresearch.org/internet/2018/01/04/crossing-the-line-what-counts-as-online-harassment; “Universal Code of Conduct/2021 Consultations/Research,” Wikimedia Meta-Wiki, June 10, 2021, meta.wikimedia.org/wiki/Universal_Code_of_Conduct/2021_consultations/Research

To complicate matters, the content that users want to report often violates multiple categories. If users are unable to select all of the relevant categories, they are forced to choose. Leigh Honeywell, founder and CEO of the personal cybersecurity and anti-abuse start-up Tall Poppy, describes the dilemma users often face across multiple platforms: “You can report something as abuse targeting a specific group or you can report it as threatening. It’s up to the user to figure out which of those is going to be more effective. From what I’ve seen, it’s probably the one reporting a threat, but people don’t necessarily know that—and just reporting it as threatening without also flagging the hate aspect of it erases an important part of what makes the threat serious.”40Leigh Honeywell, interview by Kat Lo, Meedan, October 25, 2021.

Several interviewees were concerned that the category they selected could negatively affect how the platform responded to their report.41Jaclyn Friedman, interview with PEN America, May 28, 2020; Sarah Fathallah, interview by Kat Lo, Meedan, November 18, 2021; Nathan Grayson, interview by Kat Lo, Meedan, October 26, 2021; Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021; Caroline Sinders, interview by Kat Lo, Meedan, November 8, 2021. According to TikTok and Meta, content reported as abusive or harmful is reviewed against all platform policies, regardless of which category users select in reporting, though we were unable to independently verify this.42Email response from Meta spokesperson, March 17, 2023; Email response from TikTok spokesperson, February 7, 2023. If that is the case, users clearly don’t know it.

In a survey of the abuse-reporting tools provided by a range of online service providers, researchers at the Stanford Internet Observatory found that providers do not consistently enable users to report all of the various types of abuse that they may encounter.43Riana Pfefferkorn, “Content-Oblivious Trust and Safety Techniques: Results from a Survey of Online Service Providers,” Journal of Online Trust & Safety 1, no. 2 (2022), doi.org/10.54501/jots.v1i2.14 Kristiana de Leon, an activist and city council member in Washington state, found that in some reporting flows on Facebook she was given the option to select multiple categories. In other reporting flows on the same platform, however, she was only given the ability to select one category. When she was unable to select multiple categories in a report, she often reported a single post multiple times under different categories because she felt the post violated multiple policies. But she worried that making multiple reports for the same post could be counterproductive. “Sometimes I would get a note back saying we received your report, but sometimes I only get one, even though I reported maybe four categories,” Kristiana explained. “Is there some sort of hierarchy I’m not aware of? Or maybe it nullified my first one that I thought was the most important.”44Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021.

One participant expressed concern about whether there could be consequences or penalties for reporting “incorrectly” because they had not properly interpreted what counts as violative. Elizabeth Ballou, writer and narrative designer at Deck Nine Games, who has reported people on Twitter involved in harassment campaigns against marginalized game developers and journalists, expressed a concern about being flagged as an unreliable reporter: “The number of notifications I got from Twitter saying that they had actually banned an account or suspended an account or deleted a tweet were really dropping off and I wonder if Twitter has flagged my account for reporting too many things. Now they see me as a low-quality reporter, maybe they even think that I’m a spammer.”45Elizabeth Ballou, interview by Kat Lo, Meedan, November 30, 2021.

Recommendations

- Ensure that the terms and definitions for harassing and hateful tactics provided in platform policies are clear and closely align with those used in reporting mechanisms. Platform policies need to specify which kinds of tactics are violative, provide definitions with illustrative examples, and use those same terms and definitions within reporting mechanisms.

- Ensure that platform policies are easily accessible during the reporting process itself. Users should never have to leave a reporting flow in order to check policies. Platforms should use pop-ups and other methods that are easily navigable, similar to customer support interfaces.

- Give users the ability to select multiple categories of harm and indicate which tactics are being deployed. For example, allow a user to select both “harassment” and “hate speech,” and then allow the user to indicate specific tactics, such as “slurs” and/or “violent threats.”

Offer Users Two Reporting Options: Expedited and Comprehensive

Challenges

When it comes to reporting abusive content, sometimes users have different—and occasionally competing—needs. In some situations, users want the reporting process to be quicker and easier, especially if they are experiencing a high volume of abuse.46Sophia Smith Galer, interview by Kat Lo, Meedan, December 20, 2021; Nathan Grayson, interview by Kat Lo, Meedan, October 26, 2021; Natalie Wynn, interview by Kat Lo, Meedan, December 17, 2021. Among the users we interviewed, some opted to block, restrict, or mute abusive accounts, rather than report them—even when they felt the content clearly violated platform policies—because they found the reporting process so cumbersome. Corry Will noticed himself doing this: “When a major amount of harassment started happening, I basically just stopped reporting because it was far less effective than just restricting the accounts.”47Corry Will, interview by Kat Lo, Meedan, December 14, 2021. One journalist we interviewed, who requested anonymity, found the blocking feature more convenient than going through the reporting process because it was not only more empowering, but much faster.48Anonymous interview by Azza El-Masri, University of Texas at Austin, July 4, 2021. Users can often block abusive accounts in just one click, whereas reporting takes many clicks. As Claudia Lo, a senior design and moderation researcher at Wikimedia, pointed out, it can take up to six clicks to report abuse on Facebook, “by which time I have probably forgotten what I was trying to report.”49Claudia Lo, interview by Kat Lo, Meedan, October 15, 2021.

When a major amount of harassment started happening, I basically just stopped reporting because it was far less effective than just restricting the accounts.

Corry Will, educational science influencer

In other situations, users actually want a more comprehensive reporting process that provides room for context, especially if they are being harassed across multiple platforms or in combination with offline threats or abuse, or if the abuse is coded or otherwise requires additional information to understand.50Nitesh Goyal et al., “‘You have to prove the threat is real’: Understanding the Needs of Female Journalists and Activists to Document and Report Online Harassment,” CHI ’22: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, no. 242 (April 2022): 1–17, doi.org/10.1145/3491102.3517517; Aaron Smith and Maeve Duggan, “Crossing the Line: What Counts as Online Harassment?,” Pew Research Center, January 4, 2018, pewresearch.org/internet/2018/01/04/crossing-the-line-what-counts-as-online-harassment Platforms often fail to appropriately moderate abusive content that violates their policies because their moderators lack sufficient context, including cultural and linguistic nuances, to make an accurate judgment.51Oversight Board, “In the 1st case, a FB page posted an edited video of Disney’s cartoon…” Twitter, March 15, 2022, twitter.com/OversightBoard/status/1503702783177940999

Users sometimes decide whether to report abuse based on how likely they think the platform is to take the content down, given the information they are able to include in the report. For example, if abusive content contains a person’s deadname, compromising information, or an extremist dog whistle, but avoids using explicitly abusive terms, a user may choose not to report it because they are unable to provide explanatory context and therefore do not think the platform will understand that a policy has been violated.52Elisa Hansen, interview by Kat Lo, Meedan, January 4, 2022; Sophia Smith Galer, interview by Kat Lo, Meedan, December 20, 2021; Natalie Wynn, interview by Kat Lo, Meedan, December 17, 2021; Mikki Kendall, interview by Kat Lo, Meedan, December 17, 2021; Katherine Cross, interview by Kat Lo, Meedan, December 1, 2021; Corry Will, interview by Kat Lo, Meedan, December 14, 2021; Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021.

One interviewee in Lebanon reported numerous images of two closeted men kissing, which were posted without their consent.53Anonymous interview by Azza El-Masri, University of Texas at Austin, July 5, 2021. The danger of revealing LGBTQ+ identities in Lebanon, where homosexuality is socially taboo and criminalized, is significant.54Afsaneh Rigot, “Digital Crime Scenes: The Role of Digital Evidence in the Persecution of LGBTQ People in Egypt, Lebanon, and Tunisia,” Berkman Klein Center, March 7, 2022, cyber.harvard.edu/sites/default/files/2022-03/Digital-Crime-Scenes_Afsaneh-Rigot-2022.pdf; “Lebanon: Same-Sex Relations Not Illegal,” Human Rights Watch, July 19, 2018, hrw.org/news/2018/07/19/lebanon-same-sex-relations-not-illegal; Jacob Poushter and Nicholas Kent, “The Global Divide on Homosexuality Persists,” Pew Research Center, June 25, 2020, pewresearch.org/global/2020/06/25/global-divide-on-homosexuality-persists However, the interviewee believed the posts would not be seen as violating Facebook’s community standards because content moderators are not likely to be familiar with the regional or cultural context that makes these posts dangerous.55Anonymous interview by Azza El-Masri, University of Texas at Austin, July 5, 2021.

Influencer Corry Will shared a different example illustrating the importance of being able to provide context when reporting abuse. “There was a post with a number that specifically refers to trans suicide rates, that is well-known as a dog whistle, but you can’t rely on the moderator knowing what that means. To them this person just reported a number. If you can add a little context, then you could say ‘hey, this is an absolute dog whistle.’”56Corry Will, interview by Kat Lo, Meedan, December 14, 2021.

TikTok informed us that they “don’t provide details of previous reports or moderation decisions” to their content moderators “as this may unduly bias the reviewer’s decision.”57Email response from TikTok spokesperson, February 7, 2023. While it is understandable to want moderators focused on the decision at hand rather than relitigating past decisions, giving users space to add context can help moderators do their jobs more effectively.

There was a post with a number that specifically refers to trans suicide rates, that is well-known as a dog whistle, but you can’t rely on the moderator knowing what that means. To them this person just reported a number. If you can add a little context, then you could say ‘hey, this is an absolute dog whistle.’

Corry Will, educational science influencer

Recommendations

- Allow users to choose between an expedited and a comprehensive reporting option. Users should be able to select which option they prefer at the beginning of the reporting process.

- Expedited Reporting should not require more than two to three clicks to submit.

- Comprehensive Reporting should allow users to add context to explain why something is abusive and violates policies (e.g., if the harassment is part of a coordinated campaign, tied to events happening offline, etc.). This is particularly important in situations where moderators may lack knowledge of or experience with the relevant cultural or regional context.

- Provide prompts to help users understand what kinds of contextual information they need to provide to enable content moderators to assess potential policy violations. Few platforms currently offer users room to provide context at all, and those that do, such as Twitter and YouTube, do so inconsistently and often rely on open text fields with vague prompts such as “add more context.”

- Provide users with options to block, mute, restrict, or otherwise act on abusive content or accounts at the end of the reporting process. While Twitter, Instagram, and Facebook currently offer users an accessible prompt to block, mute, or restrict an abusive account at the end of the reporting flow, other platforms do not yet do this.

Risks and challenges

Enabling users to provide more detailed contextual information in the reporting process can introduce risks and challenges, which will need to be addressed:

- When users share personal information in the reporting process to provide context for abuse, that can put them at risk. This is a significant concern for people whose professions, identities, and activism make them vulnerable to government surveillance and persecution, particularly when social media companies collaborate with governments or when security breaches leak personally identifiable information to the public.58Jennifer R. Henrichsen, “The Rise of the Security Champion: Beta-Testing Newsroom Security Cultures,” Columbia Journalism Review, April 30, 2020, cjr.org/tow_center_reports/security-cultures-champions.php#flashpoints Meta assured us that they give their reviewers “the minimal and appropriate amount of information needed to make an accurate decision against our policies,” and have “internal security experts” review all content flows “to ensure user privacy and security concerns are addressed.”59Email response from Meta spokesperson, March 17, 2023. We were not, however, able to independently verify this information. It is important that platforms inform users about how the contextual information in their report will be stored, how it will be associated with their account, and what privacy and other relevant policies apply so users can make informed decisions about what personal information they choose to disclose in a report.

- Giving users the ability to provide contextual information as part of the reporting process will create new demands for content moderators. To mitigate the increased volume and strain of evaluating contextual information in reports, platforms could create more structure by offering users specific prompts or more detailed multiple-choice options.

Adapt Reporting to Address Coordinated and Repeated Harassment

Challenges

Harassment is often experienced over an extended period of time: in volume, repeatedly, and/or across multiple platforms, types of media (text, image, video, etc.), and types of interactions (DMs, posts, replies, comments, etc.). Yet reporting processes are typically built to respond to a single instance from a single user at a single moment in time.

Few social media platforms explicitly or comprehensively address the phenomenon of networked or mob harassment. In a study analyzing networked harassment on YouTube, researchers at Stanford University and the University of North Carolina at Chapel Hill defined the tactic as “online harassment against a target or set of targets which is encouraged, promoted, or instigated by members of a network, such as an audience or online community.” They concluded that “YouTube’s current policies are insufficient for addressing harassment that relies on amplification and networked audiences.”60Rebecca Lewis et al., “‘We Dissect Stupidity and Respond to It’: Response Videos and Networked Harassment on YouTube,” American Behavioral Scientist, 65(5), 735–756, doi.org/10.1177/0002764221989781 Some platforms are starting to fill this gap. For example, in 2021 Facebook launched a new policy to “remove coordinated efforts of mass harassment that target individuals and put them at heightened risk of offline harms,” even if individual pieces of content do not otherwise appear to violate policies.61Antigone Davis, “Advancing Our Policies on Online Bullying and Harassment,” Meta, October 13, 2021, about.fb.com/news/2021/10/advancing-online-bullying-harassment-policies And Discord’s and Twitch’s policies now include at least some recognition of coordinated abuse.62“Discord Community Guidelines,” Discord, accessed March 2023, discord.com/guidelines; “Community Guidelines,” Twitch, accessed March 2023, safety.twitch.tv/s/article/Community-Guidelines

Even when platform policies do address networked harassment, few platforms give users the opportunity to indicate that they are experiencing coordinated or repeated harassment or to report that kind of harassment in batches, rather than piecemeal. Twitter, it is worth noting, does allow users to report multiple violating tweets in one batch, including those by a single user. In fact, two interviewees independently praised the introduction of these batch reporting features on Twitter and suggested that it would be useful to have similar features available on other platforms.63Corry Will, interview by Kat Lo, Meedan, December 14, 2021; Katherine Cross, interview by Kat Lo, Meedan, December 1, 2021. On YouTube, when users report a channel (rather than an individual video), they can attach multiple videos from that channel to provide context, but they cannot actually report multiple videos at once.64“Report inappropriate videos, channels, and other content on YouTube,” Google, accessed May 2023, support.google.com/youtube/answer/2802027 While enabling users to report multiple pieces of abusive content—including across content types (such as comments, replies, and DMs) and across multiple users—presents real technical and operational challenges, it is absolutely critical in order to align with lived user experience and facilitate effective content moderation. Reporting systems need to reflect online abuse as it actually manifests for users online rather than forcing users to carry the burden not only of abuse, but of conforming to the limitations of systems not designed to serve them.

Recommendations

- Enable users to submit a single report containing multiple violative pieces of content, across content types such as comments or posts, perpetrated by a single account.

- Enable users to report multiple abusive comments and posts from multiple, separate accounts in a single reporting flow.

- Enable users to indicate within the reporting process that the abusive content they are experiencing seems to be a part of a coordinated or networked harassment campaign and to request that their report be reviewed holistically in that context.

Risks and challenges

It is important to acknowledge that bulk reporting can be weaponized. In formal comments to PEN America, Meta and TikTok acknowledged this reality, but assured us that mass reporting does not have any impact on their content moderation decisions or response times.65Email response from Meta spokesperson, March 17, 2023; Email response from TikTok spokesperson, February 7, 2023. And yet, over the years, there have been ample cases of mass reporting being used maliciously to trigger content takedowns and account suspensions as a tactic to harass and silence.66Brian Contreras, “‘I need my girlfriend off TikTok’: How hackers game abuse-reporting systems,” Los Angeles Times, December 3, 2021, latimes.com/business/technology/story/2021-12-03/inside-tiktoks-mass-reporting-problem; Sam Biddle, “Facebook Lets Vietnam’s Cyberarmy Target Dissidents, Rejecting a Celebrity’s Plea,” The Intercept, December 21, 2020, theintercept.com/2020/12/21/facebook-vietnam-censorship; Katie Notopoulos, “How Trolls Locked My Twitter Account For 10 Days, And Welp,” BuzzFeed News, December 2, 2017, buzzfeednews.com/article/katienotopoulos/how-trolls-locked-my-twitter-account-for-10-days-and-welp; Russell Brandom, “Facebook’s Report Abuse button has become a tool of global oppression,” The Verge, September 2, 2014, theverge.com/2014/9/2/6083647/facebook-s-report-abuse-button-has-become-a-tool-of-global-oppression; Ariana Tobin et al., “Facebook’s Uneven Enforcement of Hate Speech Rules Allows Vile Posts to Stay Up,” ProPublica, December 28, 2017, propublica.org/article/facebook-enforcement-hate-speech-rules-mistakes

Allowing users to report multiple pieces of content and accounts at one time may exacerbate this problem. At present, users who have had their content removed or accounts suspended due to malicious reporting can appeal, though the appeals process can be slow and ineffective. And civil society organizations can also escalate incidents of malicious mass reporting to platforms, though again this process is uneven in its efficacy. But in introducing or strengthening the capacity to bulk report content or accounts, more will need to be done to mitigate the risk of weaponization. Platforms may need to limit how many accounts or pieces of content can be reported at a time, or to activate the bulk reporting feature only when automated systems identify an onslaught of harassment that bears hallmarks of coordinated inauthentic activity. Ultimately, risk assessment, consultation with civil society, and user testing during the design process would reveal the most effective mitigation strategies.
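For product teams weighing the batch-reporting recommendations and the weaponization risks described above, one way to picture a single report covering many items is as a simple data structure. The minimal Python sketch below is illustrative only: it groups content from one or more accounts, lets the reporter flag a suspected coordinated campaign, and enforces a cap on batch size as one possible mitigation. All names (`ContentItem`, `BatchReport`, `MAX_ITEMS_PER_REPORT`) and the cap of 50 are hypothetical assumptions, not any platform’s actual API or limits.

```python
from dataclasses import dataclass, field
from enum import Enum


class ContentType(Enum):
    """Hypothetical content types; each platform defines its own."""
    POST = "post"
    COMMENT = "comment"
    REPLY = "reply"
    DM = "dm"


@dataclass(frozen=True)
class ContentItem:
    """One piece of reported content and the account that posted it."""
    content_id: str
    account_id: str
    content_type: ContentType


# Hypothetical cap on items per report, one possible guard against weaponized bulk reporting.
MAX_ITEMS_PER_REPORT = 50


@dataclass
class BatchReport:
    """A single report covering many items, possibly from many accounts."""
    reporter_id: str
    categories: set[str]
    items: list[ContentItem] = field(default_factory=list)
    suspected_coordinated: bool = False  # reporter flags a possible networked campaign
    context: str = ""                    # optional free-text context for moderators

    def add(self, item: ContentItem) -> None:
        """Add an item, ignoring duplicates and enforcing the batch cap."""
        if item in self.items:
            return
        if len(self.items) >= MAX_ITEMS_PER_REPORT:
            raise ValueError(f"a single report may contain at most {MAX_ITEMS_PER_REPORT} items")
        self.items.append(item)

    def accounts(self) -> set[str]:
        """Distinct accounts named in this report."""
        return {item.account_id for item in self.items}


# Usage: one report spanning a comment and a reply from two different accounts.
report = BatchReport(
    reporter_id="user_1",
    categories={"harassment", "hate speech"},
    suspected_coordinated=True,
)
report.add(ContentItem("c1", "acct_a", ContentType.COMMENT))
report.add(ContentItem("c2", "acct_b", ContentType.REPLY))
print(sorted(report.accounts()))  # ['acct_a', 'acct_b']
```

Keeping the coordination flag and the free-text context on the report itself, rather than on each item, reflects the recommendation that moderators review such a report holistically. Where the cap sits, or whether bulk reporting is unlocked only when automated systems detect a coordinated onslaught, is exactly the kind of choice that risk assessment and user testing would need to settle.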

Integrate Documentation into Reporting

Challenges

A critical step for navigating online abuse is documenting that it happened. Documentation enables users to save evidence and share it with their allies and employers, law enforcement, and legal counsel. People experiencing online abuse can lose evidence if the abuser deletes the abusive content or if the content is reported to platforms and removed.

When you have hundreds coming at you and your Twitter feed changes constantly, you can’t keep up with the speed. The [messages] that were problematic in the beginning, you just lose them because you don’t want to scroll through five different pages to find the tweet.

Jin Ding, chief of staff and operations at the Center for Public Integrity

Documentation should dovetail smoothly with the process of reporting abuse to platforms. At present, however, most platforms do not offer any kind of documentation feature, let alone one that is integrated with the reporting process. In fact, users are sometimes hesitant to report abuse because they are afraid of losing evidence of the abusive behavior. “People are concerned that reporting things will actually result in it being deleted from the platform in such a way that they can’t recover it for law enforcement or litigation purposes,” explains Leigh Honeywell, founder and CEO of Tall Poppy. In the absence of better options, Honeywell often recommends informal documentation methods to those facing abuse.67Leigh Honeywell, interview by Kat Lo, Meedan, October 25, 2021.

As a result, users attempting to document abuse often resort to taking screenshots or compiling links in Google Docs68Praveen Sinha, interview by Kat Lo, Meedan, October 13, 2021; Stephanie Brumsey, interview by Kat Lo, Meedan, November 4, 2021., informal methods which may prove inadequate to use as evidence in court or in escalating a case to a social media platform.69Leigh Honeywell, interview by Kat Lo, Meedan, October 25, 2021; Madison Fischer, “Recent Federal Court Ruling on Admissibility of Online Evidence,” American Bar Association, November 30, 2015, americanbar.org/groups/litigation/committees/commercial-business/practice/2015/recent-federal-court-ruling-admissibility-online-evidence/ Furthermore, users not only have to manually report every individual piece of abusive content they receive, but they also have to manually document it, multiplying the steps they have to take to manage abuse. Jin Ding, chief of staff and operations at the Center for Public Integrity, emphasized the challenge of taking these additional steps to document abuse, especially in the face of a cyber mob. “When you have hundreds coming at you and your Twitter feed changes constantly, you can’t keep up with the speed. The [messages] that were problematic in the beginning, you just lose them because you don’t want to scroll through five different pages to find the tweet.”70Jin Ding, interview by Kat Lo, Meedan, December 21, 2021.

Finally, accessible documentation is absolutely critical for newsrooms, publishers, and other institutions to protect the safety of their staff and freelancers and to assess risk. Jareen Imam, currently senior content and editorial manager at Amazon and previously director of social newsgathering at NBC, emphasized the importance of documentation in assessing the potential for escalation to physical attacks. “Documenting this stuff is important because there are sometimes repeated individuals that keep coming back to harass you, and if you don’t have a good sense of who they are, and get some kind of risk assessment, you never know what could happen.”71Jareen Imam, interview by Kat Lo, Meedan, October 29, 2021.

“I don’t know why it’s not rote, built into the systems, that when you press ‘report’ on a comment or a link, that the link or comment isn’t copied and pasted into the report,” says Stephanie Brumsey, a segment producer for MSNBC. “Why is the entire onus on the victim and all the companies that the victims work for?”72Stephanie Brumsey, interview by Kat Lo, Meedan, November 4, 2021.

Recommendations

- Seamlessly integrate documentation into the reporting process. When users report abusive content, the reporting mechanism should give them the option to automatically collect and save all publicly available information about the abusive content or account that they reported (e.g., time and date the abusive comment was made, the account responsible for the abusive comment, the post on which the comment was made, etc.). The documentation could be emailed to users and/or saved in their reporting dashboard (see section 1).

- Enable users to export a structured document with a detailed record and history of the abusive content they reported. Users should be able to share this documentation with their employers, allies, law enforcement, or legal counsel as needed, to show evidence of the harassment they are experiencing.

- Provide API access that allows users to submit reports to social media platforms directly via vetted third-party anti-harassment tools. When people use third-party anti-harassment tools to help with blocking, muting, and documentation (see the case study below), they often have to then separately report the abuse directly on the platform itself. Enabling users to submit reports directly from within trusted third-party tools73 can condense documentation and reporting into a single action, rather than requiring users to do twice the work. Additionally, users should be able to enable third-party tools to capture any additional contextual information that they submitted when reporting abuse so that they do not have to reenter that information manually into the third-party tool.

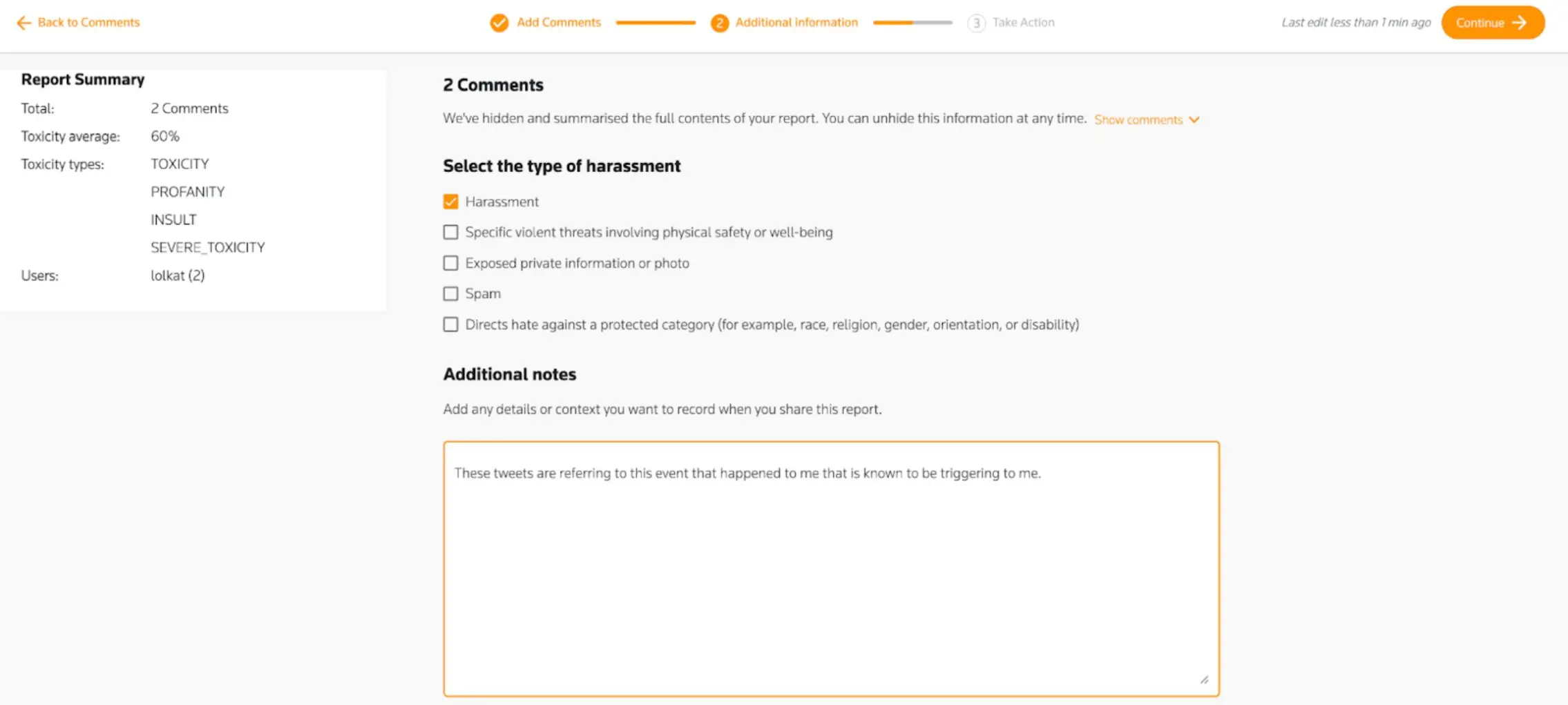

Product case study: TRFilter

In 2022, the Thomson Reuters Foundation, in collaboration with Google’s Jigsaw division, launched a third-party tool called TRFilter to help media professionals manage online abuse on Twitter. TRFilter features a dashboard that enables users to document the harassment they experience and then export that documentation to share with others. The dashboard uses machine learning to identify and highlight potentially abusive tweets or comments, assigns this content a toxicity rating, and tags it by type of abusive tactic. Users can then select one or more of these toxic tweets or comments—manually or automatically by type of harassment or toxicity rating—and export them in a single document that packages all the key information. The dashboard has customizable features, allowing users to blur content to reduce exposure and trauma. Previously, users could also bulk block and bulk mute accounts and content from within TRFilter, but due to Twitter’s new API licensing rules,73“Developer Agreement,” Twitter, accessed April 7, 2023, developer.twitter.com/en/developer-terms/agreement users can no longer do so.74Email response from Thomson Reuters Foundation spokesperson, April 21, 2023. Users also cannot report abuse from within TRFilter; to do so, they have to log into Twitter. TRFilter is a model for the kinds of tools that platforms could build directly into their reporting processes or, at the very least, encourage, integrate with, and support.

Make It Easier to Access Support When Reporting

Challenges

Facing an influx of abuse—and reporting all of it—can be isolating, exhausting, and time-consuming. Targets of abuse often seek out and benefit from the support of friends, family, and colleagues to cope with the emotional distress they experience, including having friends read and address abusive content for them.75Jaigris Hodson et al., “I get by with a little help from my friends: The ecological model and support for women scholars experiencing online harassment,” First Monday 23, no. 8 (2018), doi.org/10.5210/fm.v23i8.9136 In a study of HeartMob, a third-party platform that offers individuals facing harassment the ability to access peer support, users most frequently requested help reporting abuse to social media platforms, yet HeartMob users rarely received that type of support because social media platforms lack integrated options for allies to report on a target’s behalf.76Lindsay Blackwell et al., “Classification and Its Consequences for Online Harassment: Design Insights from HeartMob,” Proceedings of the ACM on Human-Computer Interaction 1 (2017): 1–19, doi.org/10.1145/3134659 To reduce exposure and trauma, people experiencing harassment need to be able to turn to their allies for help reviewing and reporting abusive content.77Jin Ding, interview by Kat Lo, Meedan, December 21, 2021; Stephanie Brumsey, interview by Kat Lo, Meedan, November 4, 2021; Michelle Ferrier, “Attacks and Harassment: The Impact on Female Journalists and Their Reporting,” TrollBusters and International Women’s Media Foundation, September 2018, iwmf.org/wp-content/uploads/2018/09/Attacks-and-Harassment.pdf Azmina Dhrodia, former senior policy manager for gender and data rights at the World Wide Web Foundation, noted the benefit of seeking the support of allies, but pointed out that “It’d be nice to have a way to know that friends are helping you report without having to be in constant conversation across other messaging platforms, which is very time-consuming.”78Azmina Dhrodia, interview by Kat Lo, Meedan, October 8, 2021.

Sophisticated delegation features that enable gated access to a user’s account—so that they don’t need to share account login credentials with allies—have already been implemented for other purposes, including the Teams feature for TweetDeck79“How to use the Teams feature on TweetDeck,” Twitter, accessed March 2023, help.twitter.com/en/using-twitter/tweetdeck-teams and channel management features on YouTube.80“Add or remove access to your YouTube channel,” Google, accessed March 2023, support.google.com/youtube/answer/9481328 Third-party apps designed to help users navigate online abuse, such as Block Party, have also created sophisticated delegated access features; Block Party’s “Helper” feature, for example, enables users facing abuse on Twitter to designate helpers who can block and mute on their behalf but cannot post on their account or access their direct messages.81“How it Works,” Block Party, accessed May 30, 2023, blockpartyapp.com/how-it-works Unfortunately, instead of supporting third-party apps like Block Party and integrating features like delegated access, Twitter and other social media platforms are increasingly making it impossible for such apps and features to survive.82Billy Perrigo, “She Built an App to Block Harassment on Twitter. Elon Musk Killed It,” TIME, June 2, 2023, time.com/6284494/block-party-twitter-tracy-chou-elon-musk/

Finally, it is worth noting that social media platforms sometimes act differently on abusive content reported by an ally than on content reported directly by the impacted user. In some reporting mechanisms, users have to indicate whether they are reporting on their own behalf or on behalf of a third party. For example, Meta’s policies require users to self-report specific forms of abuse, including “some types of bullying and harassment like unwanted manipulated imagery or positive physical descriptions…because it helps us understand that the person targeted feels bullied or harassed.”83Email response from Meta spokesperson, March 17, 2023, which directly quoted Meta’s policies; “Bullying and Harassment,” Facebook, accessed March 2023, transparency.fb.com/en-gb/policies/community-standards/bullying-harassment Policies that require self-reporting can help prevent deliberate and unintentional misuse of reporting mechanisms, such as allies mistakenly reporting content as abusive because they do not understand its context. However, such policies can also further confuse and burden those facing abuse, because users may not understand whether and when they can ask their allies for help.84Elisa Hansen, interview by Kat Lo, Meedan, January 4, 2022; Kristiana de Leon, interview by Kat Lo, Meedan, November 30, 2021.

Recommendations

- Enable users to delegate reporting of abusive content to “ally” accounts—trusted friends or colleagues whom they can authorize to report on their behalf. Ensure that users can specify whether they or their delegate will receive notifications on reporting outcomes. Ensure that users can set an end date for delegated authorization and revoke it at any time.

- Communicate clearly to users, in the reporting flow itself, if a report will only be moderated when submitted directly by the target of abuse, rather than by an ally.

- When a user—or their delegated ally—reports abusive content, direct them to a set of resources offering: a) additional support for the targeted user, b) guidance for the ally in supporting the target, and c) resources to help the ally manage their own well-being (such as hotlines, tip sheets, and help centers).

- Spotlight and fund the work of civil society organizations that provide resources, training, incident response, and hotline support to individuals facing online abuse.

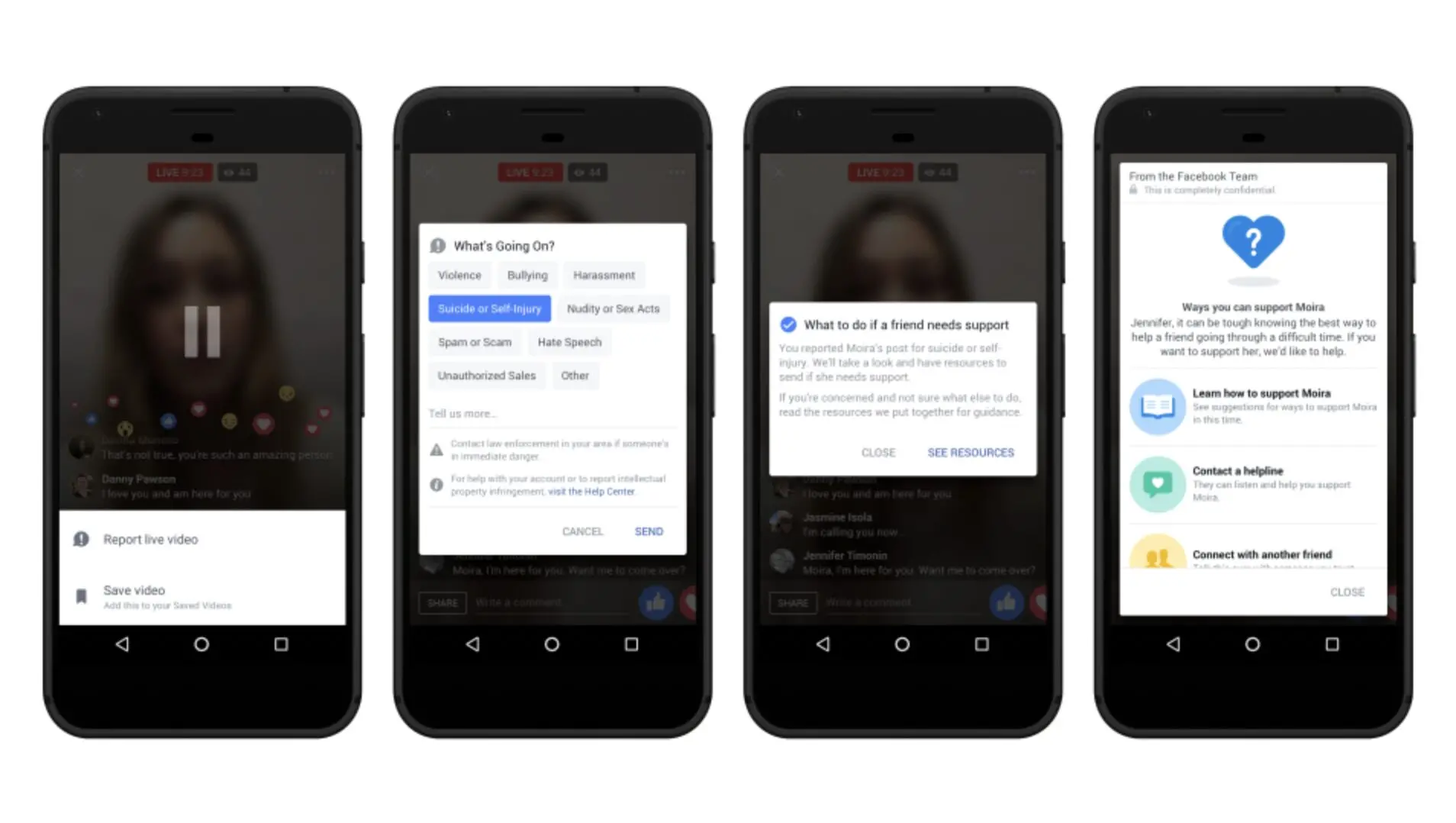

Product case study: Facebook self-harm support screens/pop-ups

On Facebook, when an ally reports content created by another user that seems to indicate or promote self-harm, the ally is shown a support screen that offers guidance and resources on how to help users engaging in or discussing self-harm. This feature, which was introduced in 2017 and is still active today, serves as a model for how reporting mechanisms can provide allies with just-in-time guidance on supporting those facing abuse.

Conclusion

Mechanisms to report abuse to social media platforms are a critical part of the larger content moderation process—and they need significant improvement to better protect users and safeguard free expression online. We need reporting mechanisms that are more user-friendly, accessible, transparent, efficient, and effective, with clearer and more regular communication between the user and the platform.

There have been some improvements to reporting systems in recent years, but these gains are fragile and insufficient. Twitter, for example, had gradually introduced more advanced reporting features, but that progress ground to a halt once Elon Musk bought the platform and—among other actions—drastically reduced the Trust and Safety staff overseeing content moderation and user reporting.85Marianna Spring, “Twitter insiders: We can’t protect users from trolling under Musk,” BBC, March 6, 2023, bbc.com/news/technology-64804007; Barbara Ortutay and Matt O’Brien, “Twitter layoffs slash content moderation staff as new CEO Elon Musk looks to outsource,” USA Today, November 15, 2022, usatoday.com/story/tech/2022/11/15/elon-musk-cuts-twitter-content-moderation-staff/10706732002; Janosch Delcker, “Twitter’s sacking of content moderators raises concerns,” Deutsche Welle, November 16, 2022, dw.com/en/twitters-sacking-of-content-moderators-will-backfire-experts-warn/a-63778330 This pattern is playing out across the industry, with companies such as Meta, Google, and Twitter cutting back their Trust and Safety teams.86J.J. McCorvey, “Tech layoffs shrink ‘trust and safety’ teams, raising fears of backsliding efforts to curb online abuse,” NBC News, February 10, 2023, nbcnews.com/tech/tech-news/tech-layoffs-hit-trust-safety-teams-raising-fears-backsliding-efforts-rcna69111 At the same time, new platforms and technologies, from Clubhouse and the Metaverse to ChatGPT, are introducing new avenues for abuse while often failing to implement even the most basic reporting features.87Queenie Wong, “As Facebook Plans the Metaverse, It Struggles to Combat Harassment in VR,” CNET, December 9, 2021, cnet.com/features/as-facebook-plans-the-metaverse-it-struggles-to-combat-harassment-in-vr; Sheera Frenkel and Kellen Browning, “The Metaverse’s Dark Side: Here Come Harassment and Assaults,” The New York Times, December 30, 2021, nytimes.com/2021/12/30/technology/metaverse-harassment-assaults.html

These inconsistencies across the industry highlight the need for clear standards for minimum viable reporting systems on social media platforms. The protection of users and of free expression online should not be dependent on the decision-making of a single executive or platform. This report maps out how social media companies can collectively begin to reimagine reporting mechanisms to increase transparency, empower users, and reduce the chilling effect of online abuse—but it is up to these companies to take up that challenge.

Methodology

Scope

For this report, PEN America and Meedan set out to better understand how creative and media professionals report online abuse to social media platforms, how reporting mechanisms actually work, and how these mechanisms can be improved. We centered our work on the experiences of those disproportionately attacked online for their identity and profession, specifically writers, journalists, content creators, and human rights activists, especially those who identify as women, LGBTQ+, people of color, and/or members of religious or ethnic minorities.

Interviews were conducted primarily with creative and media professionals based in the United States, as well as several participants based in the United Kingdom and Canada. We fully acknowledge, however, that many of the technology companies analyzed in this report have a global user base, and one of the central challenges to curtailing online abuse is the blanket application of United States–based rules, strategies, and cultural norms globally.88“Activists and tech companies met to talk about online violence against women: here are the takeaways,” Web Foundation, August 10, 2020, webfoundation.org/2020/08/activists-and-tech-companies-met-to-talk-about-online-violence-against-women-here-are-the-takeaways Throughout this report, we strove to take into account the ways that changes to reporting mechanisms on global platforms could play out in regions and geopolitical contexts outside the United States and North America. To that end, we interviewed several experts and creators who work globally, including multiple reporters, activists, and experts based in Lebanon, where Meedan has especially strong partnerships.